Investigating Robot Learning of Quadrupedal Locomotion on Deformable Terrain

M.Sc. thesis - GPU-accelerated Isaac Sim workspace that couples Position-Based Dynamics (PBD) gravel simulation with a curriculum-driven PPO policy to achieve robust, energy-efficient locomotion across soft, uneven, and granular ground.

News (March 2025) – A preliminary extension of this framework was accepted as a short paper at the German Robotics Conference 2025 (GRC 2025).

Read the extended abstract here

Read the poster here

Download the thesis presentation (PPTX)

This project is the deliverable of my M.Sc. thesis at RWTH Aachen.

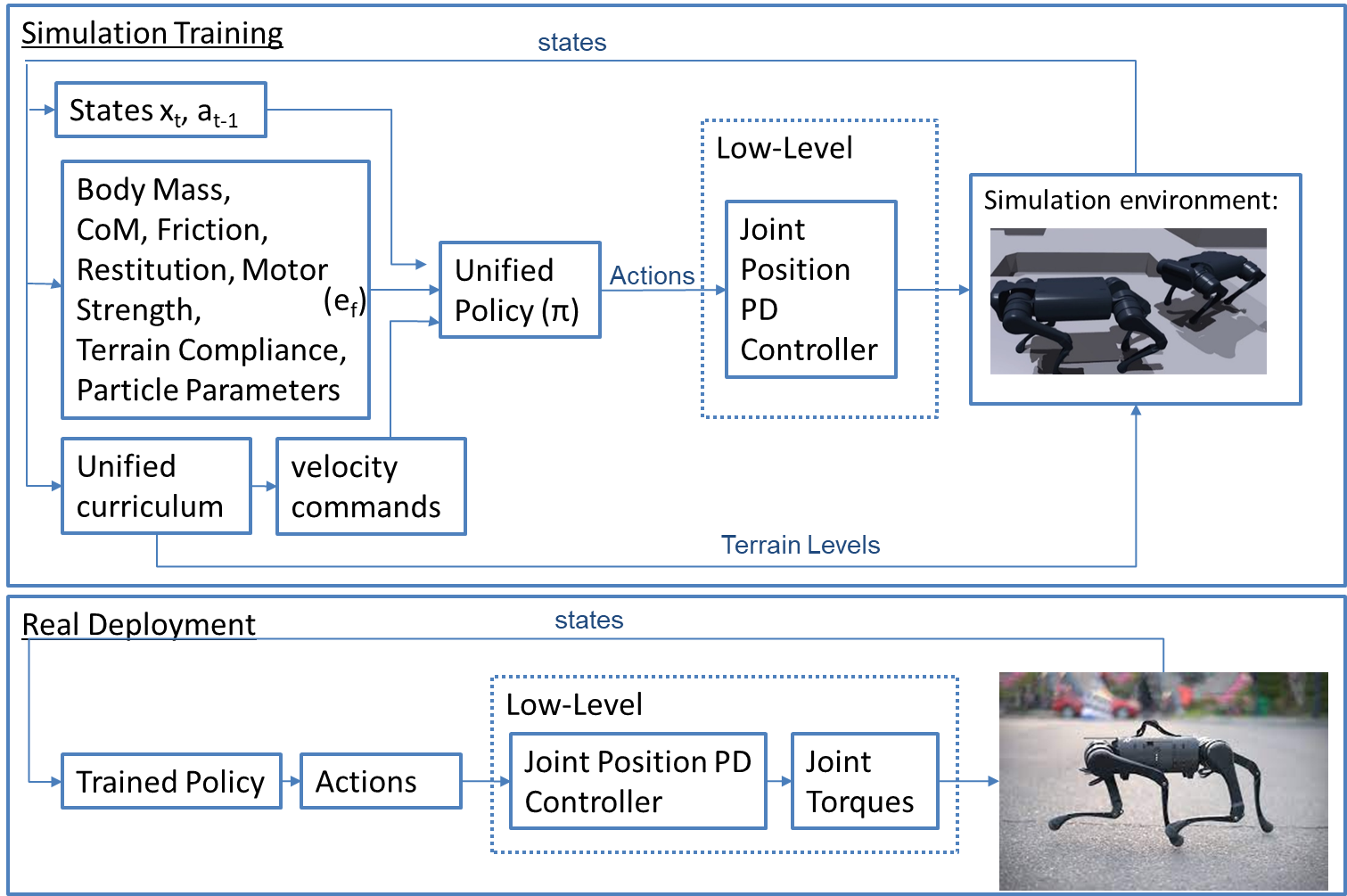

It packages an end-to-end pipeline—simulation, reinforcement-learning (RL), evaluation, and visualisation—for training quadruped robots to handle deformable terrain such as sand, gravel, and soft soil.

Built around NVIDIA Isaac Sim and OmniIsaacGymEnvs, the workspace brings together:

- Position-Based Dynamics (PBD) particles for real-time granular media.

- Massively parallel Proximal Policy Optimization (PPO).

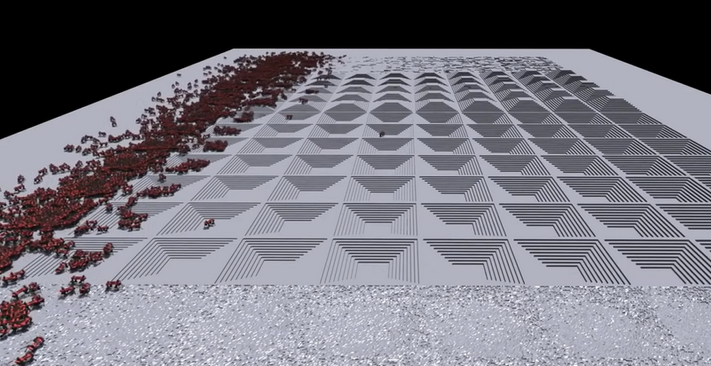

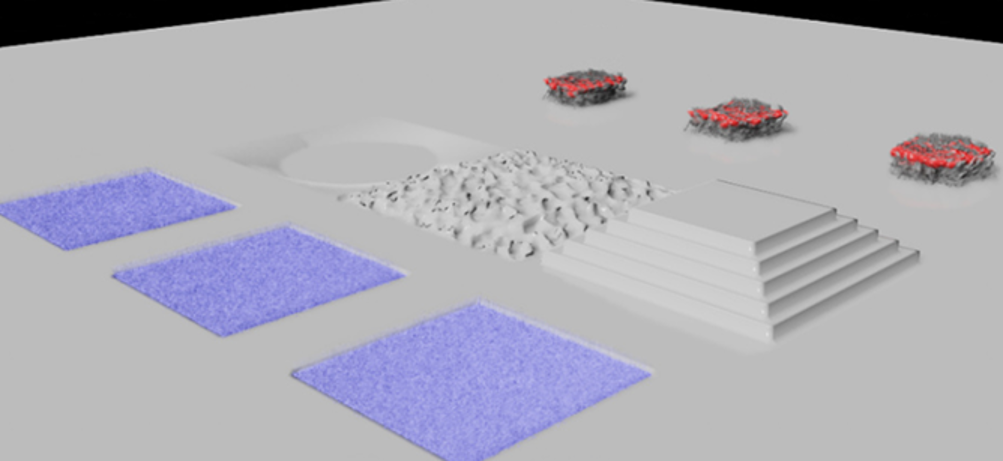

- An automatic terrain curriculum that graduates from rigid slopes to particle-filled depressions.

- Domain randomisation (friction, density, adhesion, external pushes) for sim-to-real transfer.

- Integrated metrics dashboards and helper scripts for reward-curve replay and inference video capture.

“The adoption of PBD allowed for a more accurate and computationally efficient simulation of granular interactions, facilitating real-time training and testing of RL policies.”
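As a rough illustration of what a PBD update does, the following is a minimal NumPy sketch, not the solver used by Isaac Sim. It assumes equal-mass spheres, a flat ground plane at z = 0, and a brute-force pair loop; the function name `pbd_step` and all parameter defaults are hypothetical. The three stages (predict positions, project constraints, derive velocities from the position change) are the standard PBD structure.

```python
import numpy as np

def pbd_step(x, v, radius, dt, iterations=4, gravity=(0.0, 0.0, -9.81)):
    """One didactic PBD step for equal-mass spheres above the plane z = 0.

    x, v: (N, 3) arrays of positions and velocities.
    Returns the new positions and velocities.
    """
    x = np.asarray(x, dtype=float)
    v = np.asarray(v, dtype=float)
    g = np.asarray(gravity, dtype=float)

    # 1) Predict positions from the current velocities plus gravity.
    p = x + dt * (v + dt * g)

    # 2) Iteratively project positions onto the constraints.
    for _ in range(iterations):
        # Ground constraint: sphere centres stay one radius above z = 0.
        p[:, 2] = np.maximum(p[:, 2], radius)
        # Pairwise non-penetration: |p_i - p_j| >= 2 * radius.
        # O(N^2) loop for clarity only; real solvers use spatial hashing.
        for i in range(len(p)):
            for j in range(i + 1, len(p)):
                d = p[i] - p[j]
                dist = np.linalg.norm(d)
                overlap = 2.0 * radius - dist
                if overlap > 0.0 and dist > 1e-9:
                    corr = 0.5 * overlap * d / dist  # split equally (equal masses)
                    p[i] += corr
                    p[j] -= corr

    # 3) Velocities follow from the corrected position change.
    v_new = (p - x) / dt
    return p, v_new
```

Because velocities are derived from corrected positions rather than integrated from forces, the update tolerates large time steps. Friction and adhesion are omitted from this sketch.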

Motivation - Experiments

Methodology

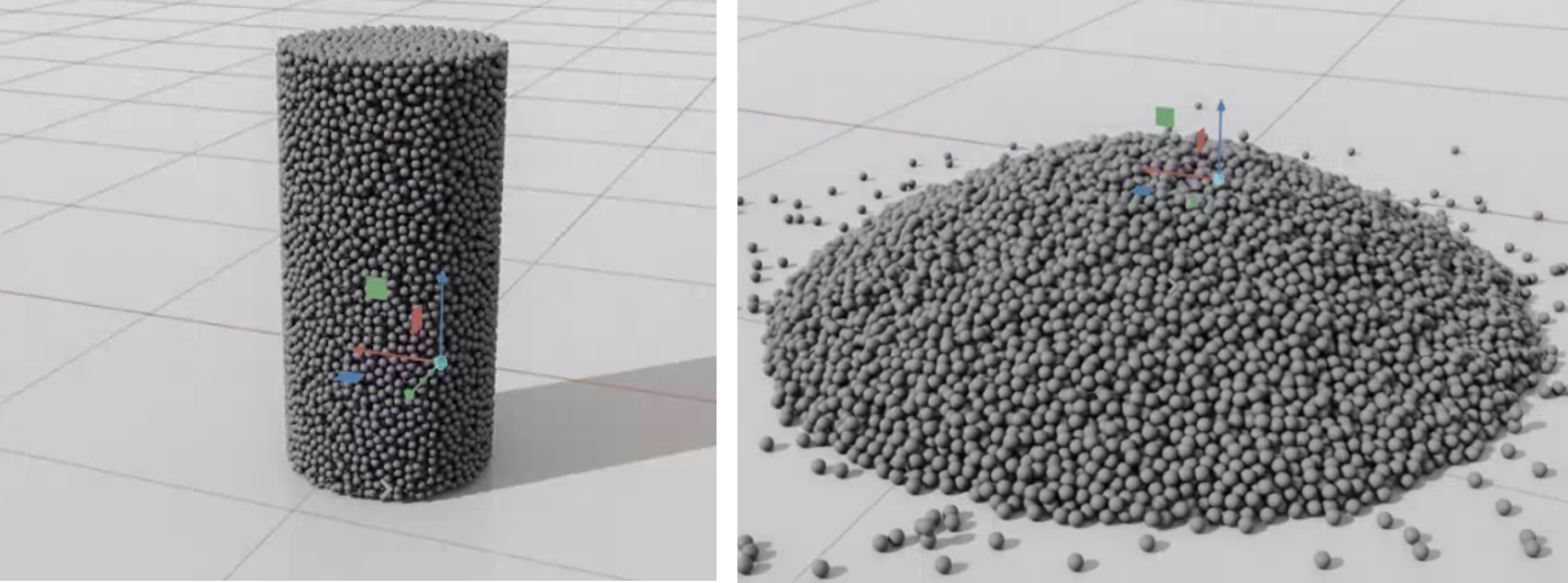

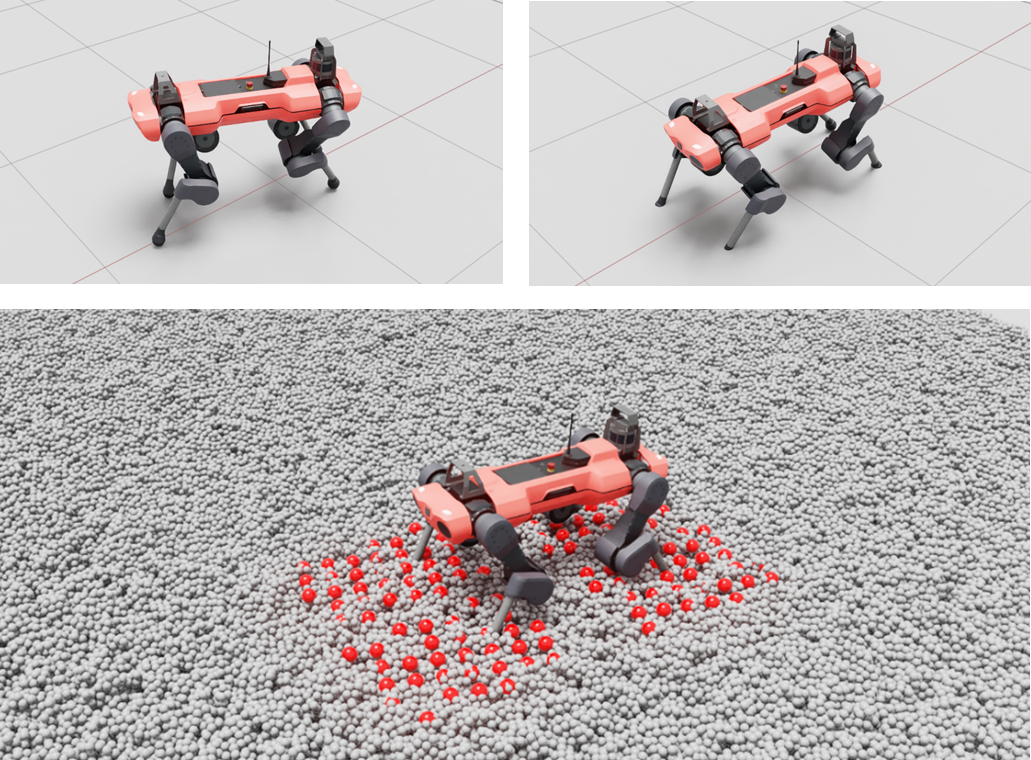

- Deformable-Terrain Simulator for locomotion – Spawns ∼200 k PBD particles inside mesh “depressions” in Isaac Sim and refits the bounding volume hierarchy (BVH) on the fly, with two-way robot-terrain contacts.

| Component | Details |
|---|---|
| State Vector (188 D) | Base lin/ang vel, gravity vec, 12 joint pos + vel, previous action, 140-cell height grid. |
| Action Space (12 D) | Joint-angle offsets; torques clipped to ±80 N m. |
| Rewards | Velocity tracking, torque/accel regularisers, stumble penalty, peak-contact penalty; airtime term disabled in Phase 2. |
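The table can be read as the following sketch of how an observation might be assembled and how a 12-D action might become clipped joint torques. Two things here are assumptions rather than facts from the thesis. First, the components listed in the table sum to 185 entries, so the sketch assumes the remaining three are the commanded velocities (x, y, yaw), as in the “Learning to Walk in Minutes” baseline. Second, the PD gains `kp`, `kd` and the `action_scale` are hypothetical placeholder values.

```python
import numpy as np

NUM_JOINTS = 12
HEIGHT_CELLS = 140
TORQUE_LIMIT = 80.0  # N m, from the table


def build_observation(lin_vel, ang_vel, gravity, command,
                      q, qd, prev_action, heights):
    """Concatenate all terms into one flat 188-D vector.

    `command` (3-D) is an ASSUMPTION: the table lists only 185 entries.
    """
    obs = np.concatenate(
        [lin_vel, ang_vel, gravity, command, q, qd, prev_action, heights]
    )
    assert obs.shape == (188,), obs.shape
    return obs


def action_to_torque(action, q, qd, q_default,
                     action_scale=0.25, kp=20.0, kd=0.5):
    """Map joint-angle offsets to torques with a PD law, then clip.

    action_scale, kp, and kd are hypothetical values for illustration.
    """
    q_target = q_default + action_scale * action  # offset around default pose
    tau = kp * (q_target - q) - kd * qd           # PD tracking torque
    return np.clip(tau, -TORQUE_LIMIT, TORQUE_LIMIT)
```

Clipping at the torque level keeps the policy's output unbounded while still respecting the ±80 N m actuator limit.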

- Two-Stage RL Curriculum:

  - Phase 1: 2000 epochs on rigid terrain with a velocity curriculum and airtime / collision / stumble / other rewards.

  - Phase 2: gravel only; particle material properties are re-randomised every 20 s to improve policy generalisation.

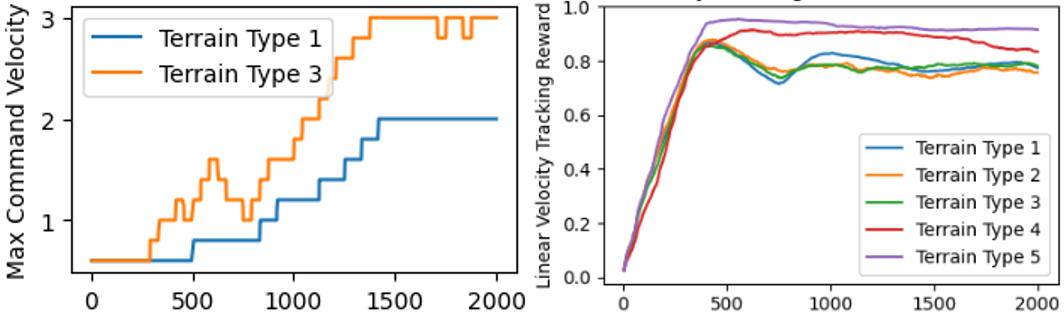
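The Phase 2 randomisation of particle material properties every 20 s could be scheduled roughly as follows. This is a sketch under stated assumptions: the sampling ranges in `RANGES` are hypothetical (the README only gives nominal values for the pit), uniform sampling is assumed, and applying the sampled values to the simulator is deliberately left out because it depends on the Isaac Sim API in use.

```python
import numpy as np

RESAMPLE_PERIOD_S = 20.0  # from the Phase 2 description

# Hypothetical ranges, chosen only for illustration.
RANGES = {
    "friction": (0.2, 0.5),
    "density": (1500.0, 2500.0),  # kg/m^3
    "adhesion": (0.0, 0.1),
}


class ParticleMaterialRandomizer:
    """Resamples particle material parameters on a fixed time period."""

    def __init__(self, ranges, period_s, seed=0):
        self.ranges = ranges
        self.period_s = period_s
        self.rng = np.random.default_rng(seed)
        self.elapsed = 0.0
        self.current = self._sample()

    def _sample(self):
        # Uniform sampling within each range (an assumption).
        return {
            name: float(self.rng.uniform(lo, hi))
            for name, (lo, hi) in self.ranges.items()
        }

    def step(self, dt):
        """Advance by dt seconds; return True if parameters were resampled."""
        self.elapsed += dt
        if self.elapsed >= self.period_s:
            self.elapsed -= self.period_s
            self.current = self._sample()
            return True
        return False
```

A training loop would call `step(dt)` once per simulation step and push `current` to the particle material whenever it returns `True`.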

- Velocity-Aware Command Curriculum – Command ranges widen automatically when the average reward exceeds 80 % of its maximum, enabling safe exploration without premature falls.

- Benchmark Replication – Re‑implemented the “Learning to Walk in Minutes” baseline in both Isaac Gym and Isaac Sim. Average episodic‑reward curves overlap within ±2 %, confirming that migrating to Isaac Sim’s richer GUI incurs no learning penalty.
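The velocity-aware command curriculum described above can be sketched as a small update rule. The 80 % threshold comes from the description; the increment `step` and the cap `limit` are hypothetical values, and a symmetric range is an assumption.

```python
def update_command_range(cmd_range, mean_reward, max_reward,
                         step=0.5, limit=3.0, threshold=0.8):
    """Widen the (low, high) velocity command range when tracking is good.

    step and limit are hypothetical; threshold=0.8 follows the README.
    """
    lo, hi = cmd_range
    if mean_reward > threshold * max_reward:
        lo = max(lo - step, -limit)  # extend the lower bound, capped
        hi = min(hi + step, limit)   # extend the upper bound, capped
    return (lo, hi)
```

For example, `update_command_range((-1.0, 1.0), 0.9, 1.0)` widens the range to `(-1.5, 1.5)`, while a mean reward of 0.7 leaves it unchanged.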

Terrain Curriculum

- Rigid Section – Mix of slopes (±25 °), stairs (0.3 m × 0.2 m), and 0.2 m random obstacles.

- Granular Section – Central 4 × 4 m pit filled with 2 mm PBD spheres (ρ = 2000 kg m⁻³, μ = 0.35).

- Agents graduate to harder terrain when their average episode reward exceeds a threshold and regress to easier terrain otherwise; the curriculum is designed to prevent catastrophic forgetting.
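The graduate-or-regress rule can be sketched per agent as follows. The function name, the integer level encoding, and the clamping to `[0, max_level]` are assumptions for illustration. The mechanism used against catastrophic forgetting is not specified here and is not modelled.

```python
import numpy as np


def update_terrain_levels(levels, mean_rewards, threshold, max_level):
    """Move each agent up one level if its reward exceeds the threshold,
    otherwise down one level; levels stay within [0, max_level]."""
    levels = np.asarray(levels, dtype=int).copy()
    mean_rewards = np.asarray(mean_rewards, dtype=float)
    promote = mean_rewards > threshold
    levels[promote] += 1
    levels[~promote] -= 1
    return np.clip(levels, 0, max_level)
```

With `levels=[0, 3, 5]`, `mean_rewards=[0.9, 0.2, 0.9]`, `threshold=0.5`, and `max_level=5`, the result is `[1, 2, 5]`: the first agent is promoted, the second regresses, and the third stays at the cap.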

Results Highlights

This project successfully trained a quadruped robot to walk on fully deformable terrain simulated with PBD particles in Isaac Sim. The final policy demonstrates a clear ability to maintain balance and achieve a stable trot across a gravel pit.

Compared to a baseline model, our two-phase training curriculum produced a significantly more energy-efficient and stable robot.

Phase 1 (Rigid Terrains): The initial training phase on rigid surfaces dramatically improved energy efficiency. The robot learned a smoother gait that reduced power consumption to nearly half that of the baseline, resulting in a Cost of Transport (CoT) more than five times lower than the baseline's. This shows the effectiveness of our reward structure in promoting efficient movement.
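For reference, the Cost of Transport is commonly defined as power divided by weight times forward speed, CoT = P / (m g v), so lower is better. The sketch below uses that standard definition; whether the thesis computes power from absolute joint mechanical power, as assumed in `mechanical_power`, is not stated here.

```python
import numpy as np


def mechanical_power(torques, joint_vels):
    """Mean over time of the summed absolute joint power |tau * qd|.

    torques, joint_vels: arrays of shape (T, num_joints).
    Using absolute mechanical power is an assumption for illustration.
    """
    per_step = np.sum(np.abs(torques * joint_vels), axis=1)
    return float(np.mean(per_step))


def cost_of_transport(power_w, mass_kg, speed_m_s, g=9.81):
    """Dimensionless CoT = P / (m * g * v)."""
    return power_w / (mass_kg * g * speed_m_s)
```

Since CoT depends on both power and speed, a change in CoT can be larger than the change in power alone when the achieved speed also changes.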

Phase 2 (PBD Gravel): Fine-tuning on granular terrain further refined the robot’s stability. While this required slightly more power than walking on flat ground, the robot became much more sure-footed. It exhibited tighter control over its body orientation and more precise joint tracking, leading to fewer balance errors and more accurate foot placement on the shifting gravel.

In short, the training pipeline produced a policy that is not only robust enough to handle deformable ground but also remarkably efficient and stable in its movements. 🤖

Major Limitation

Due to GPU memory/throughput constraints, we were unable to scale the granular-terrain simulations (PBD particle counts and domain size) beyond the presented setup. Consequently, the amount and diversity of deformable-terrain experience collected during training were limited. Additionally, Isaac Sim currently runs the PBD particle pipeline entirely on the CPU, which introduces a significant bottleneck for large-scale granular simulations. This restricts achievable frame rates and limits the practicality of training on more complex deformable terrains without distributed CPU resources.

Ongoing Work

- Cloud-scale simulation & training – Containerize the workspace and orchestrate Isaac Sim + PPO across multi-GPU cloud platforms to scale PBD particle counts/terrain size and expand experience collection.

- Terrain-adaptive velocity curriculum to enable high-speed locomotion training.

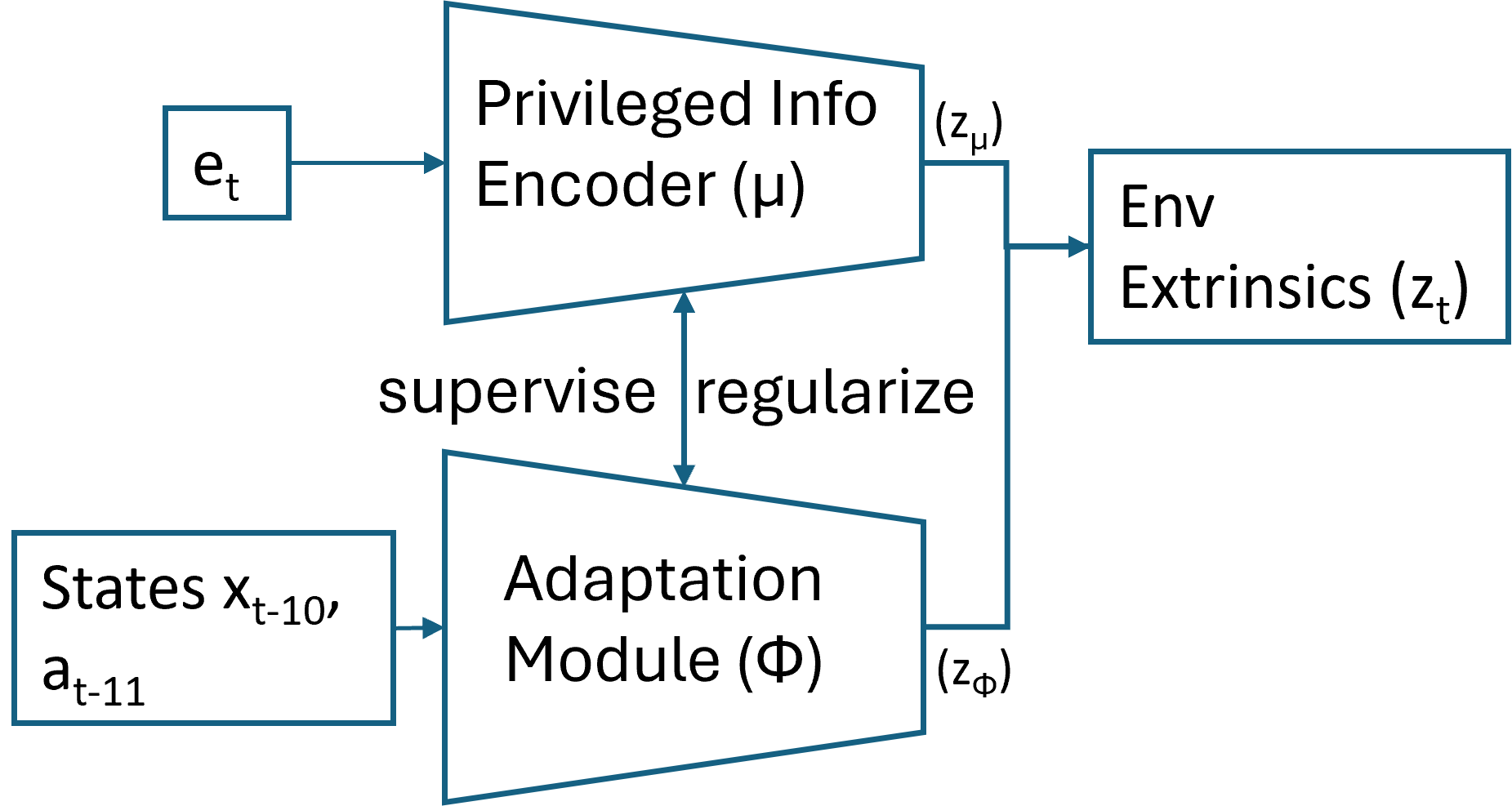

- Privileged student–teacher transfer and adaptation module for rapid sim-to-real adaptation.

- SAC + online adaptation to cut sample complexity on CPU-bound particle sims.