Peg-in-Hole Simulation & RL Platform

Internship at Fraunhofer IPA - 2

A sample-efficient framework for learning high-precision insertion: a UR5e + Robotiq Hand-E gripper, full contact dynamics in MuJoCo, synchronized multi-camera vision, configurable classical controllers, and an end-to-end RLPD-style training pipeline.

See also:

Modular Control Pipeline for Real UR5e Manipulator

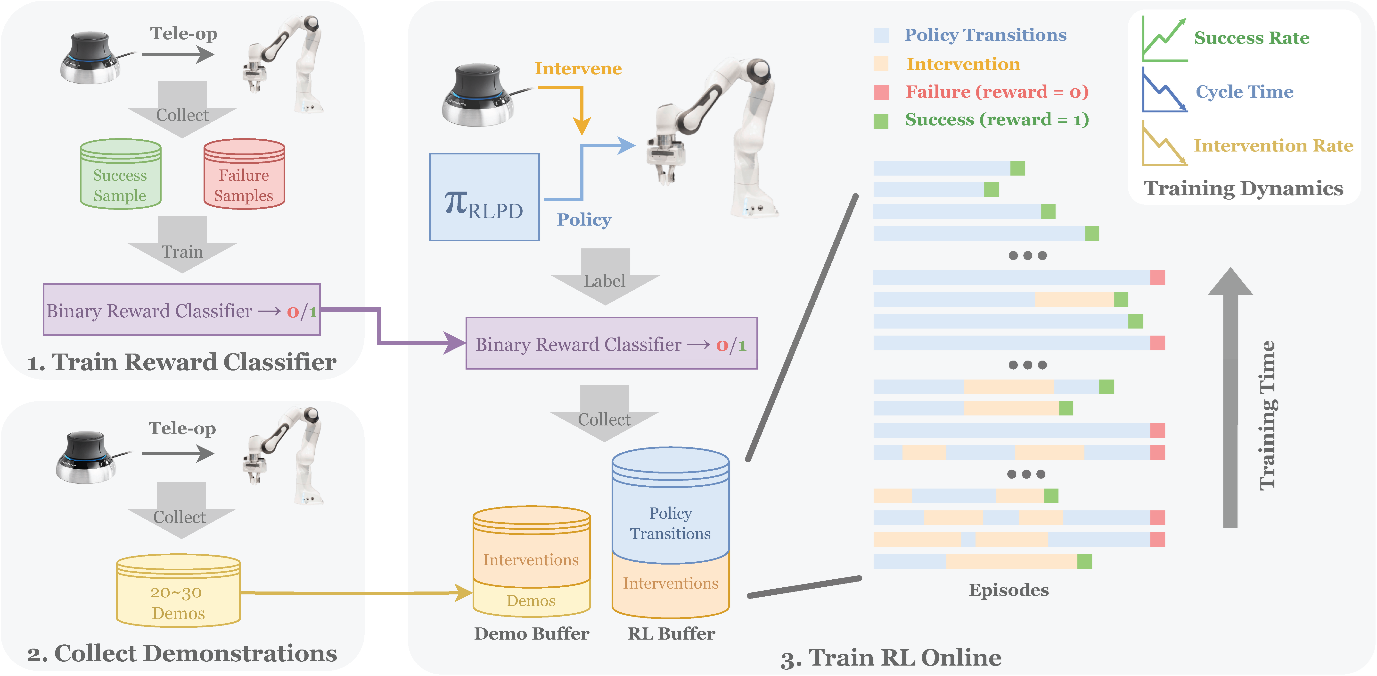

RLPD-Style Training Pipeline

- Pipeline design inspired by the HIL-SERL framework, enabling human-in-the-loop interventions during training.

- Replay buffer mixes expert demos with online rollouts and supports both prioritized and uniform sampling.

- JAX/Flax Ensemble SAC learner with convolutional encoders for images and FC nets for states.

- Actor–Learner split enables asynchronous collection; Docker image ships with GPU + virtual-display support.
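The demo/online mixing described above can be sketched as follows. This is a minimal illustration, not the project's buffer: the class name `MixedReplay`, the 50/50 `demo_fraction` default, and the dictionary transition format are all assumptions, and only uniform sampling is shown.

```python
import random
from collections import deque


class MixedReplay:
    """Hypothetical buffer: fixed expert demos plus a bounded online FIFO."""

    def __init__(self, demo_transitions, online_capacity=100_000,
                 demo_fraction=0.5, seed=0):
        if not demo_transitions:
            raise ValueError("need at least one demo transition")
        self.demos = list(demo_transitions)
        self.online = deque(maxlen=online_capacity)  # oldest rollouts drop out
        self.demo_fraction = demo_fraction           # assumed 50/50 split
        self.rng = random.Random(seed)

    def add(self, transition):
        """Store one transition collected by an actor."""
        self.online.append(transition)

    def sample(self, batch_size):
        """Uniformly sample a batch that mixes demo and online transitions."""
        # Until online data exists, the whole batch comes from the demos.
        if self.online:
            n_demo = round(batch_size * self.demo_fraction)
        else:
            n_demo = batch_size
        n_online = batch_size - n_demo
        batch = self.rng.choices(self.demos, k=n_demo)
        if n_online:
            batch += self.rng.choices(list(self.online), k=n_online)
        self.rng.shuffle(batch)
        return batch
```

Sampling a fixed share of every batch from the demos keeps the expert data in play throughout training, even once the online buffer is far larger than the demo set.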

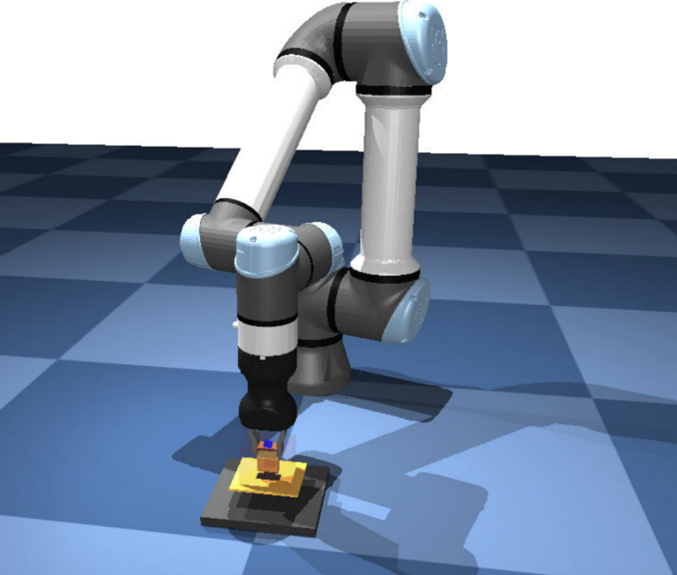

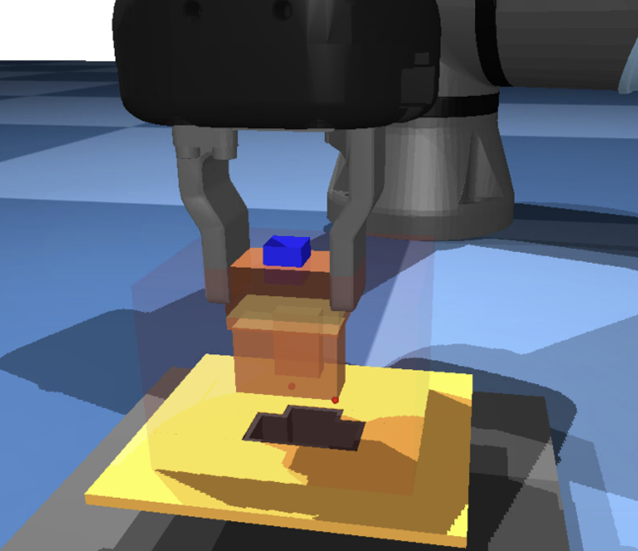

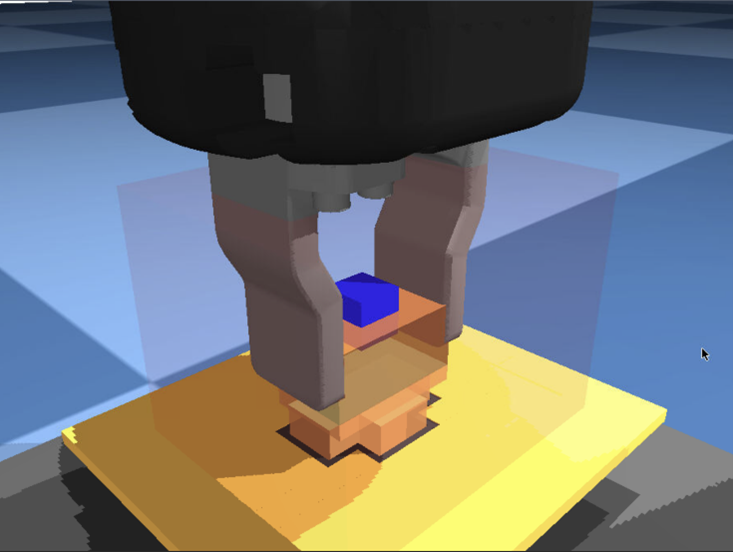

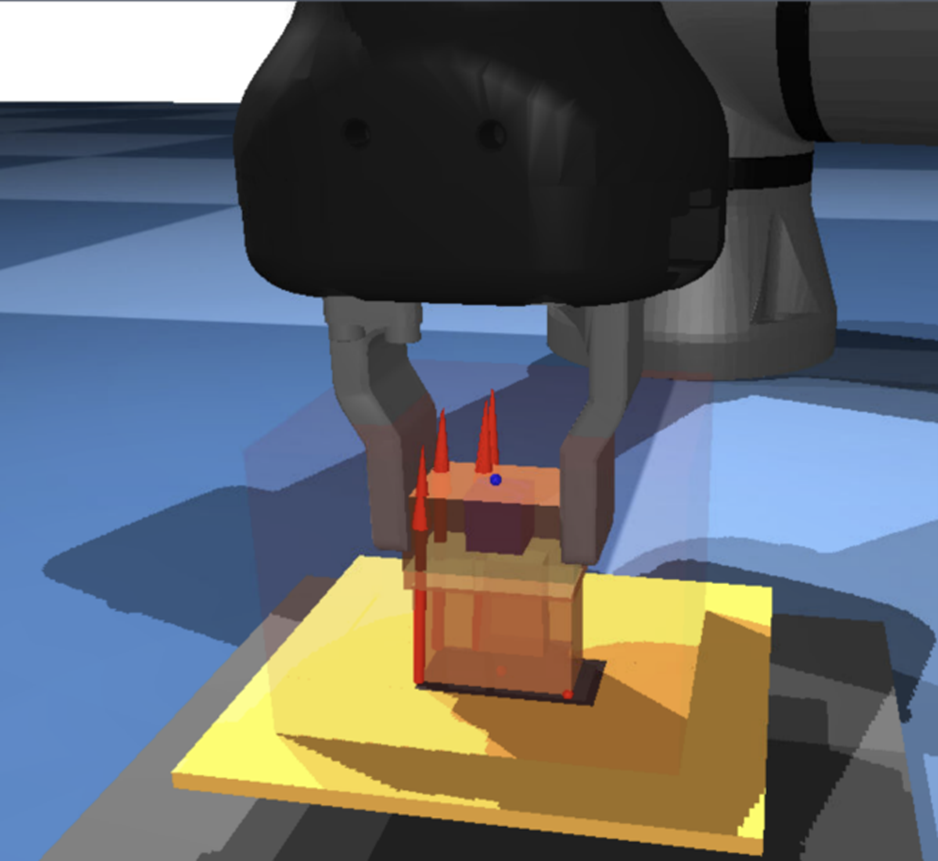

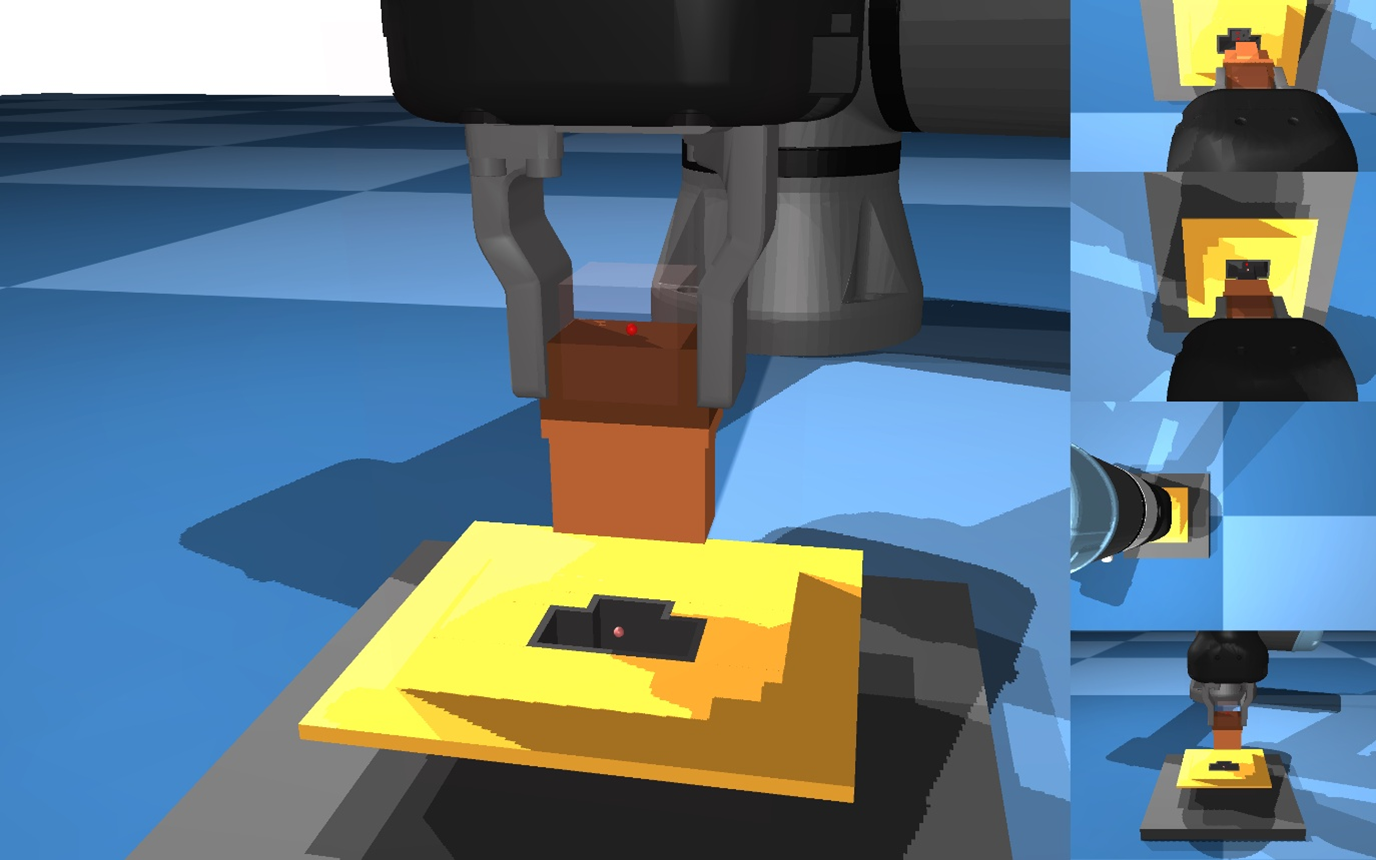

1 Simulation Environment

- MuJoCo scene with calibrated UR5e arm, Hand-E gripper, male connector, and a female port.

- Realistic inertia, joint limits, fingertip collisions, and contact sensors.

- Plug-and-play Gymnasium interface, difficulty presets, headless/off-screen rendering, and Docker packaging for reproducibility.

1.1 Difficulty Presets

The environment ships with three ready-to-use difficulty presets so you can benchmark algorithms under progressively tougher conditions without touching low-level code.

| Level | Scenario Highlights | Use-Case |
|---|---|---|
| Easy | Fixed port orientation, modest XYZ randomization, and a rich observation vector (TCP pose, velocity, force-torque, connector & port poses). | Rapid prototyping & controller tuning. |
| Medium | Adds in-plane & vertical port shifts plus reduced observations; the agent still sees force-torque and connector pose but must cope with larger spatial variability. | Early learning-from-pixels or demo-bootstrapped RL experiments. |
| Hard | Full 6-DoF port orientation randomization and a minimalist observation set (no connector or port pose), stressing contact sensing and visual feedback. | Final performance evaluation & sim-to-real robustness tests. |

Switching levels is as simple as selecting the corresponding Gymnasium ID in your training script; everything else (rendering, logging, reward structure) stays consistent.
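One way to picture the presets is as a small configuration table keyed by environment ID. The sketch below is illustrative only: the IDs (`PegInHole-Easy-v0` and so on), the `Preset` fields, and the observation key names are hypothetical, and the exact observation set for Medium is a guess based on the table above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Preset:
    randomize_port_orientation: bool  # full 6-DoF orientation randomization
    observation_keys: tuple           # what the agent is allowed to see


# Hypothetical IDs and key names; the real registry may differ.
PRESETS = {
    "PegInHole-Easy-v0": Preset(
        False,
        ("tcp_pose", "tcp_velocity", "force_torque",
         "connector_pose", "port_pose"),
    ),
    "PegInHole-Medium-v0": Preset(
        False,
        ("tcp_pose", "tcp_velocity", "force_torque", "connector_pose"),
    ),
    "PegInHole-Hard-v0": Preset(
        True,
        ("tcp_pose", "tcp_velocity", "force_torque"),
    ),
}


def filter_observation(env_id, full_observation):
    """Keep only the observation entries exposed by the chosen preset."""
    keys = PRESETS[env_id].observation_keys
    return {k: full_observation[k] for k in keys}
```

In a training script, the same ID string would be the argument passed to `gymnasium.make`, so changing difficulty stays a one-line change.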

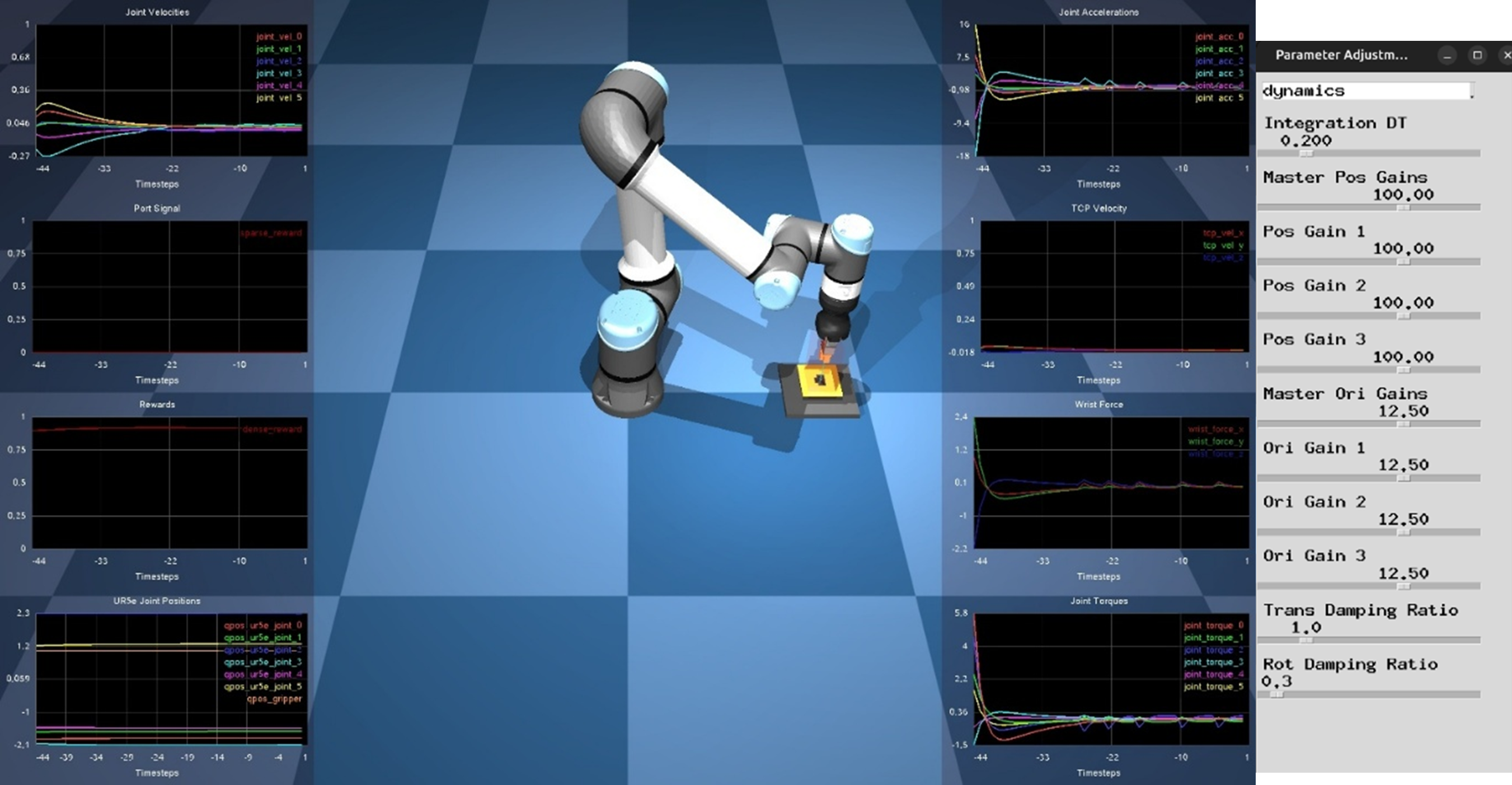

2 Modular Control Stack

- Configurable PD/Impedance controller with inverse-dynamics, pseudo-inverse, and Jacobian-transpose modes.

- Real-time safety: joint, velocity & acceleration clipping, workspace envelope.

- Live-tunable gains via a lightweight Qt UI with sliders and force-vector overlay.
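As a rough illustration of the Jacobian-transpose mode and the workspace envelope, the sketch below computes joint torques from a task-space spring-damper law, tau = J^T (Kp e - Kd v), and clamps a Cartesian target to a box. The function names, the scalar gains, and the box-shaped workspace are assumptions made for the example, not the project's controller.

```python
def impedance_torques(jacobian, pos_error, velocity, kp, kd):
    """Jacobian-transpose impedance law: tau = J^T (kp * e - kd * v).

    jacobian is a list of rows (task dimensions x joints); kp and kd are
    scalar gains applied to every task axis (an assumption for brevity).
    """
    # Task-space wrench from a spring-damper around the target pose.
    wrench = [kp * e - kd * v for e, v in zip(pos_error, velocity)]
    n_joints = len(jacobian[0])
    # tau_j = sum_i J[i][j] * wrench[i], i.e. multiplication by J transposed.
    return [
        sum(jacobian[i][j] * wrench[i] for i in range(len(wrench)))
        for j in range(n_joints)
    ]


def clamp_to_workspace(target, lower, upper):
    """Project a Cartesian target onto an axis-aligned workspace box."""
    return [max(lo, min(hi, x)) for x, lo, hi in zip(target, lower, upper)]
```

The transpose mapping needs no matrix inversion, which makes it a simple, robust option near singularities compared with the pseudo-inverse mode.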

3 Interactive Tele-op & Data Capture

- SpaceMouse and keyboard drivers for human-in-the-loop manipulation.

- Synchronized RGB video & state logs, plus scripted rollout presets for reproducible demonstrations.

- An automated test suite validates every Docker build with a short rollout and frame dump.

4 Vision Observations

Two virtual cameras—front-view & wrist—deliver 640 × 480 RGB frames each timestep, alongside proprioceptive state.

Wrappers support frame stacking, normalization, and video logging.
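Frame stacking and normalization can be sketched without any dependencies. In the example below, frames are flat lists of 8-bit pixel values and the class name `FrameStack` is a placeholder; the project's actual wrappers presumably operate on image arrays inside Gymnasium wrappers.

```python
from collections import deque


class FrameStack:
    """Keeps the last k normalized frames; pads with the first frame on reset."""

    def __init__(self, k):
        self.k = k
        self.frames = deque(maxlen=k)  # the oldest frame is dropped automatically

    @staticmethod
    def normalize(frame):
        # Map 8-bit pixel values 0..255 to floats in [0, 1].
        return [p / 255.0 for p in frame]

    def reset(self, frame):
        """Start an episode: fill the whole stack with the first frame."""
        self.frames.clear()
        for _ in range(self.k):
            self.frames.append(self.normalize(frame))
        return list(self.frames)

    def step(self, frame):
        """Push the newest frame and return the stack, oldest first."""
        self.frames.append(self.normalize(frame))
        return list(self.frames)
```

Stacking a few consecutive frames gives the policy access to motion cues that a single image cannot convey.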

Takeaways & Next Steps

- Reproducibility first: Docker, tests, and clear config dictionaries cut onboarding time to minutes.

- Data matters: 20 high-quality demos were enough to bootstrap RL. In behaviour-cloning experiments with privileged state observations, performance improved notably when demonstrations were collected with the SpaceMouse instead of heuristic controllers or keyboard input.

- Introducing DAgger boosted performance further, especially in medium/hard presets where compounding errors are common.

- Future work: train the RL policy with human interventions for faster convergence, and test the entire framework on the real robot.
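For readers unfamiliar with DAgger, the loop behind the takeaway above can be shown on a toy one-dimensional problem: roll out the learner, have the expert relabel the visited states, aggregate, and refit. Everything here is a stand-in chosen for the example (the linear `expert`, the scalar state, the least-squares `fit`), and the learner drives every rollout from the first iteration, which simplifies the usual expert/learner mixing schedule.

```python
import random


def expert(state):
    # Hypothetical expert: a proportional controller that drives the state to 0.
    return -0.5 * state


def fit(dataset):
    # Least-squares fit of a linear policy a = w * s on (state, action) pairs.
    num = sum(s * a for s, a in dataset)
    den = sum(s * s for s, _ in dataset)
    return num / den if den else 0.0


def rollout(policy_gain, start, steps=10):
    # States are visited under the learner's policy, not the expert's.
    states, s = [], start
    for _ in range(steps):
        states.append(s)
        s = s + policy_gain * s
    return states


def dagger(iterations=5, seed=0):
    rng = random.Random(seed)
    dataset, w = [], 0.0  # untrained learner outputs zero action
    for _ in range(iterations):
        states = rollout(w, start=rng.uniform(0.5, 1.0))
        dataset += [(s, expert(s)) for s in states]  # expert relabels states
        w = fit(dataset)                             # retrain on the aggregate
    return w
```

Because labels are collected on the states the learner itself reaches, the dataset covers the learner's own mistakes, which is what counters compounding errors in plain behaviour cloning.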

References

[1] J. Luo et al., ‘SERL: A Software Suite for Sample-Efficient Robotic Reinforcement Learning’, Feb. 12, 2024, arXiv:2401.16013. Accessed: Jul. 04, 2024. [Online]. Available: http://arxiv.org/abs/2401.16013

[2] J. Luo, C. Xu, J. Wu, and S. Levine, ‘Precise and Dexterous Robotic Manipulation via Human-in-the-Loop Reinforcement Learning’.