Modular Control Pipeline for Real UR5e Manipulator

Internship at Fraunhofer IPA - 1

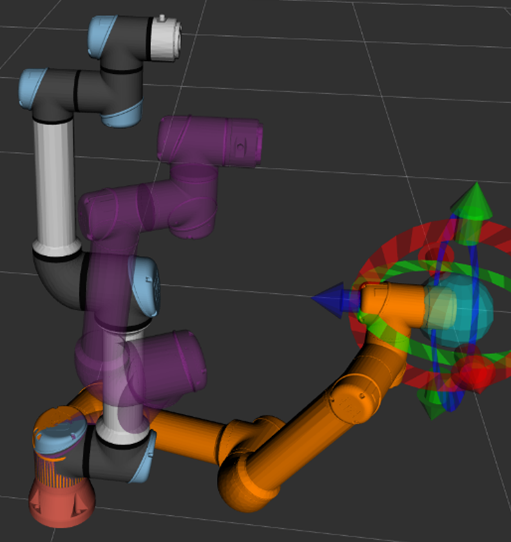

This work was developed in tandem with a high-fidelity simulation platform (see Peg-in-Hole Simulation & RL Platform), which allowed us to safely prototype and benchmark RL policies in a controlled, reproducible setting before deploying them to the real robot. The real-world setup described here forms the final stage of that pipeline — enabling online RL with direct sensor feedback once a policy has passed simulation validation.

Building a trustworthy robotic manipulation pipeline means much more than writing motion‑planning code – it requires repeatable environments, reliable sensing, and tight feedback loops. As I started my internship at Fraunhofer IPA, I designed and validated a full stack that takes a UR5e from a clean Docker image all the way to real‑time, online reinforcement‑learning (RL) control.

The journey broke down into five pillars that together form a cohesive project:

- Deterministic Workspace – a ROS + Docker baseline that anyone can spin up in minutes.

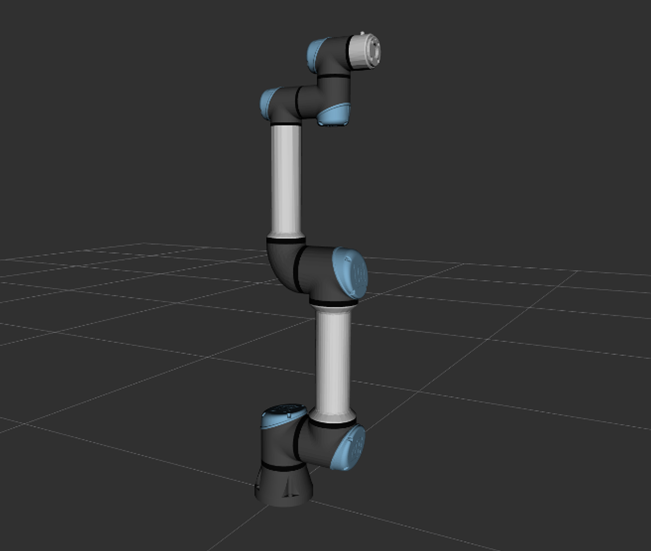

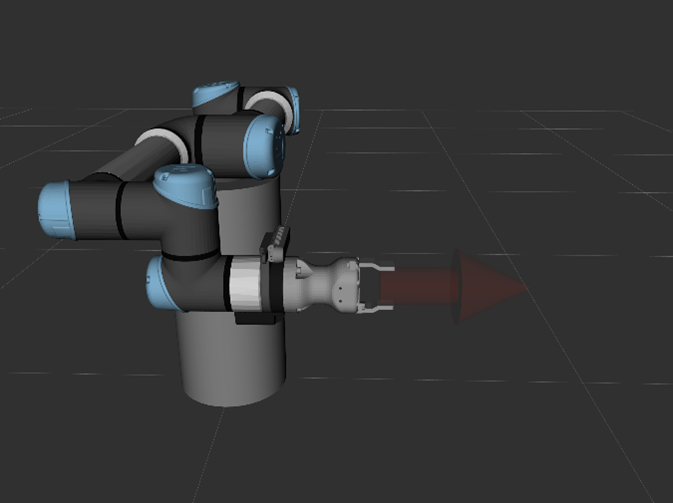

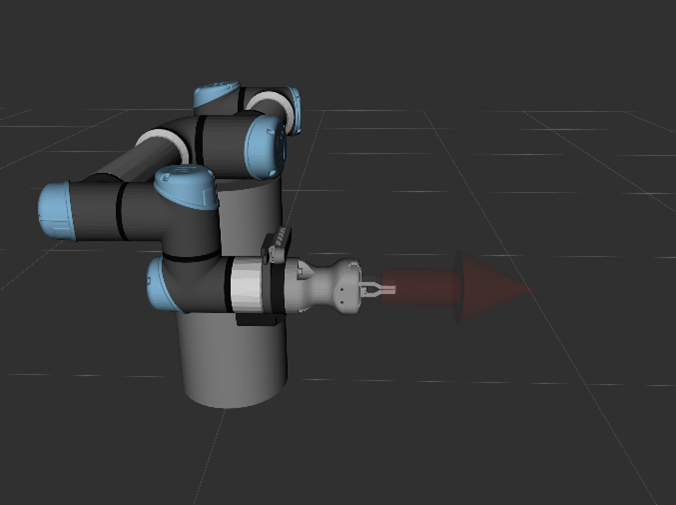

- High‑Fidelity Simulation – RViz & Gazebo models (including Robotiq Hand-E gripper integration) tuned to match the physical arm’s kinematics, joint limits, and gripper dynamics.

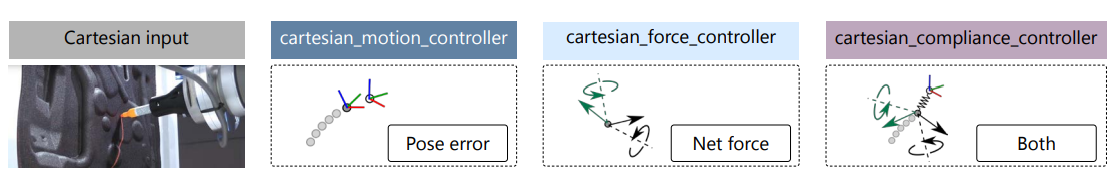

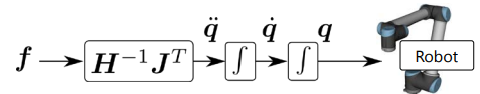

- Advanced Cartesian Control – direct task-space control with compliant, force‑controlled motion, delivered through a hot‑swappable controller suite.

- RL Experiment Server – a lightweight Flask bridge that streams observations and actions at 500 Hz.

- Modular Hardware Abstraction – self-contained packages for the arm, gripper, cameras, and teleoperation modules (keyboard & 6-DoF SpaceMouse) that plug into the same control API.

1 Deterministic Workspace

A single `docker compose up` brings in ROS Noetic, MoveIt!, and all required UR/Robotiq drivers. Sub‑modules ensure controller, gripper, and sensor firmware stay in sync. Automated linting & formatting keep the codebase clean no matter who contributes.

2 High‑Fidelity Simulation

Precise URDF tweaks and an updated IKFast solver shaved planning timeouts from seconds to milliseconds while maintaining millimetre‑level accuracy. The simulator now reflects joint‑limit envelopes exactly, preventing unpleasant surprises once code is deployed to hardware.

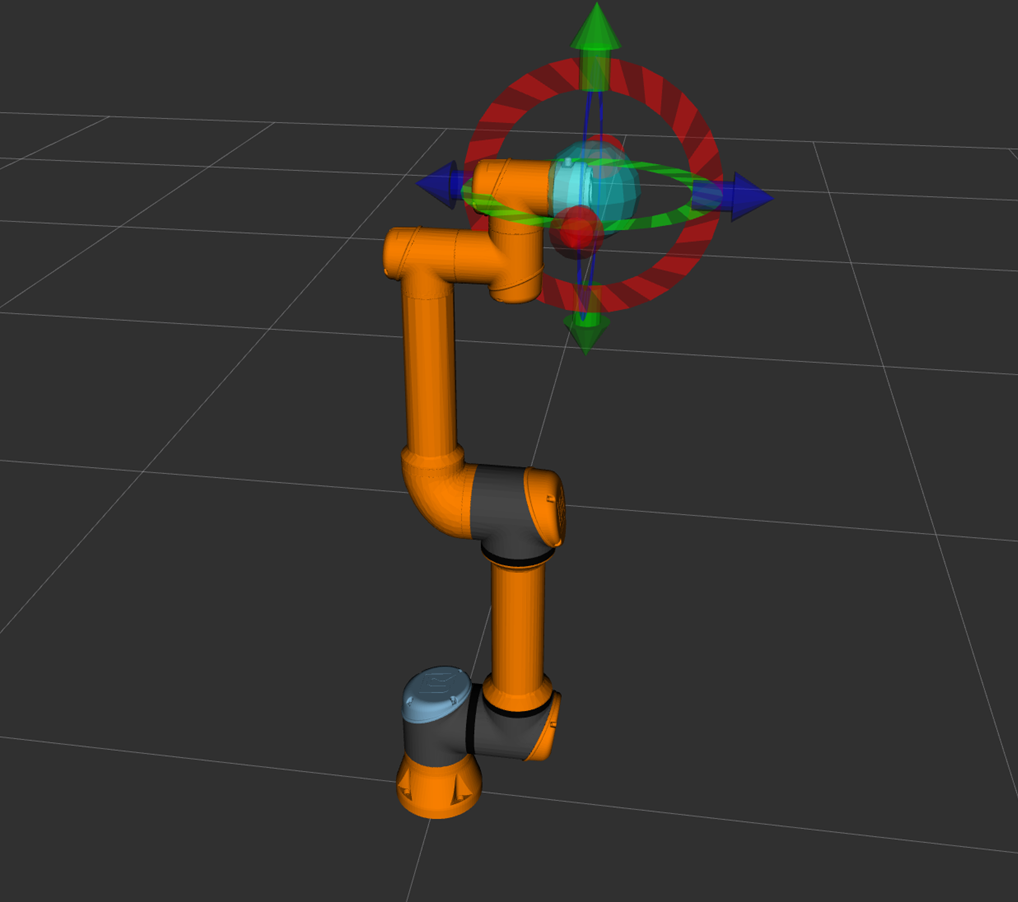

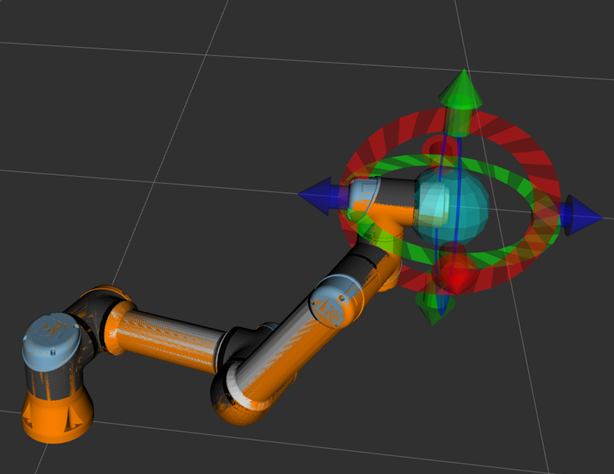

3 Advanced Cartesian Control

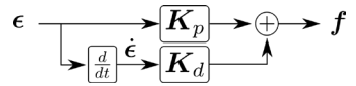

To enable real-time, closed-loop RL policies, where each action depends on instantaneous force and pose feedback, we moved from high-level, preplanned MoveIt! trajectories to external Cartesian controllers that accept direct velocity and force commands, running at 500 Hz on the UR5e.

Swapping MoveIt! planning for the open-source FZI Cartesian Controllers unlocked force-aware, compliant behaviours driven by sensor feedback. Real-time force–torque feedback, low-pass filtered at 50 Hz, lets the arm glide across surfaces with constant contact force or actively comply with external pushes.
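To make the filtering step concrete, here is a minimal sketch of a first-order (exponential) low-pass filter with a 50 Hz cutoff applied to wrench samples arriving at 500 Hz. The class name `WrenchLowPass` and the choice of a first-order filter are assumptions for illustration; the filter actually used on the robot may differ in order and implementation.

```python
import math


class WrenchLowPass:
    """First-order low-pass filter for a six-axis wrench [Fx, Fy, Fz, Tx, Ty, Tz].

    Illustrative only: the filter order and this class name are assumptions,
    not taken from the project's code.
    """

    def __init__(self, cutoff_hz=50.0, sample_hz=500.0):
        dt = 1.0 / sample_hz                     # sample period in seconds
        rc = 1.0 / (2.0 * math.pi * cutoff_hz)   # time constant of an RC low-pass
        self.alpha = dt / (rc + dt)              # smoothing factor in (0, 1)
        self.state = None                        # no sample seen yet

    def update(self, wrench):
        """Feed one raw wrench sample and return the filtered wrench."""
        if self.state is None:
            # Start from the first measurement to avoid a transient from zero.
            self.state = list(wrench)
        else:
            self.state = [
                s + self.alpha * (w - s) for s, w in zip(self.state, wrench)
            ]
        return list(self.state)


if __name__ == "__main__":
    lp = WrenchLowPass()
    lp.update([0.0] * 6)
    # A sudden 10 N step on Fz is smoothed over several samples.
    for _ in range(5):
        print(round(lp.update([0.0, 0.0, 10.0, 0.0, 0.0, 0.0])[2], 3))
```

With these rates the smoothing factor comes out at roughly 0.39, so each new sample moves the estimate a little over a third of the way toward the raw reading. That damps sensor noise while adding only a few milliseconds of lag.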

4 RL Experiment Server

A lightweight Flask micro-service wraps the entire UR5e + Hand-E stack, exposing every control primitive as simple REST calls.

- State streaming – endpoints such as `/getstate`, `/getpose`, `/geteefvel`, `/getforce`, and `/getjacobian` publish joint angles, end-effector pose/velocity, Jacobians, and six-axis wrench data as NumPy-serialised JSON.

- Action ingestion – `/pose`, `/move_gripper`, and other setters consume position/orientation targets, Cartesian twists, or discrete gripper commands generated by the learner.

- On-the-fly reconfiguration – dynamic-reconfigure routes allow real-time tuning of solver, stiffness, forward-dynamics, and per-axis PD gains without restarting the controller.

By decoupling learning and actuation across machines, this server turns the UR5e rig into a plug-and-play RL testbed: swap in any algorithm that can speak HTTP + JSON and start experimenting.
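A learner-side client for such a server can stay very small. The sketch below uses only the Python standard library and the route names listed above. Everything else is an assumption made for illustration: the base URL, the use of POST for every route, the `{"pose": [...]}` payload key, and the `RobotClient` class itself. The server's actual request and response schema should be checked against the repository.

```python
import json
import urllib.request


class RobotClient:
    """Thin HTTP + JSON client for the experiment server (hypothetical sketch)."""

    def __init__(self, base_url="http://127.0.0.1:5000",
                 opener=urllib.request.urlopen):
        self.base_url = base_url.rstrip("/")
        self._open = opener  # injectable so the client can be tested offline

    def _post(self, route, payload=None):
        # Assumption: every route accepts a POST with a JSON body.
        body = json.dumps(payload or {}).encode("utf-8")
        req = urllib.request.Request(
            self.base_url + route,
            data=body,
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with self._open(req, timeout=1.0) as resp:
            return json.loads(resp.read().decode("utf-8"))

    def get_state(self):
        """Fetch the full robot state as decoded JSON."""
        return self._post("/getstate")

    def get_force(self):
        """Fetch the current wrench reading as decoded JSON."""
        return self._post("/getforce")

    def send_pose(self, pose):
        """Send a target pose; the 'pose' key is an assumed payload format."""
        return self._post("/pose", {"pose": list(pose)})
```

An RL loop would then alternate `get_state()` and `send_pose(...)` calls, keeping the learner free of any ROS dependency.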

5 Modular Hardware Abstraction

The real-robot codebase is split into distinct packages for each device class:

- `arm` – implements `UR5eRealServer` with IK solvers and Cartesian motion utilities.

- `gripper` – provides `RobotiqGripperServerSim` and other servers exposing open/close/move commands.

- `cameras` – houses capture utilities for ZED, RealSense, and USB cameras.

- `devices` – handles operator input via keyboard or 6-DoF SpaceMouse controllers.

Shared helpers under `utils` keep transformations and ROS messaging consistent across modules. Each component registers with the Flask API so new sensors or tools can be added with minimal glue code.
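One way to picture the registration idea is a route registry that each device package adds its handlers to. The sketch below is framework-free and purely illustrative: `DeviceRegistry`, the handler bodies, and the payload keys are hypothetical, and in the real codebase the same role is presumably played by Flask's own routing.

```python
from typing import Callable, Dict, List

Handler = Callable[[dict], dict]


class DeviceRegistry:
    """Maps route strings to handlers so device packages plug in independently."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, route: str) -> Callable[[Handler], Handler]:
        """Decorator that attaches a handler to a route, rejecting duplicates."""
        def decorator(fn: Handler) -> Handler:
            if route in self._handlers:
                raise ValueError(f"route already registered: {route}")
            self._handlers[route] = fn
            return fn
        return decorator

    def dispatch(self, route: str, payload: dict) -> dict:
        """Call the handler for a route with the decoded JSON payload."""
        if route not in self._handlers:
            raise KeyError(f"unknown route: {route}")
        return self._handlers[route](payload)

    def routes(self) -> List[str]:
        return sorted(self._handlers)


registry = DeviceRegistry()


@registry.register("/move_gripper")
def move_gripper(payload: dict) -> dict:
    # Hypothetical stand-in: a real handler would forward to the gripper server.
    return {"commanded_width": float(payload.get("width", 0.0))}


@registry.register("/getforce")
def get_force(payload: dict) -> dict:
    # Hypothetical stand-in: a real handler would read the force-torque sensor.
    return {"wrench": [0.0] * 6}
```

Adding a new sensor then amounts to writing one decorated function in that sensor's package, without touching the arm or gripper code.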

Next Steps

The stack is already powering new research in contact‑rich manipulation. Future plans include:

- Add a camera to the URDF for integrated vision.

- Port the entire stack to ROS 2 for future-proofing.

- Test the updated system’s compatibility with RL workflows.

Key Takeaways

- Reproducibility first. A single, version‑pinned Dockerfile saved countless setup hours.

- Incremental testing beats big leaps. Small, instrumented experiments revealed issues early.

- Modularity pays off. Swapping planners, controllers, and RL algorithms became trivial thanks to clean interfaces.

If you’d like to reproduce the results or build on this foundation, all code is open source in the GitHub repository.

Feel free to open an issue or pull request – collaboration is welcome!